This is the first of two articles written especially for Ada Byron’s 200th birthday.

A Boolean variable is a variable which can take only two values, namely True (1) and False (0). A Boolean function is a function which takes some number of Boolean variables as inputs and produces a single Boolean variable as an output. The most intuitive way to represent a Boolean function is a truth table:

| A | B | A and B | A or B | A xor B |

|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 0 |

| 0 | 1 | 0 | 1 | 1 |

| 1 | 0 | 0 | 1 | 1 |

| 1 | 1 | 1 | 1 | 0 |

All functions in the truth table above have 2 inputs; it is not difficult to show that there are only 16 2-input Boolean functions. However, as the number of inputs increases, so does the number of functions:

| # of inputs | # of functions |

|---|---|

| 0 | 2 |

| 1 | 4 |

| 2 | 16 |

| 3 | 256 |

| 4 | 65 536 |

| 5 | 4 294 967 296 |

| 6 | 18 446 744 073 709 551 616 |

Frankly speaking, dealing with 18 quintillion functions can be quite nasty and unfulfilling, so it is reasonable to ask the following question: can we a make a toolkit of a few functions which we could then use to create all other functions*? In the rest of the article I intend to answer this question.

* Bureaucratic remark: I meant all functions with at least one input.

Without more ado, I will take an arbitrary Boolean function:

| A | B | C | F(A,B,C) |

|---|---|---|---|

| 0 | 0 | 0 | 0 |

| 0 | 0 | 1 | 0 |

| 0 | 1 | 0 | 1 |

| 0 | 1 | 1 | 0 |

| 1 | 0 | 0 | 1 |

| 1 | 0 | 1 | 0 |

| 1 | 1 | 0 | 0 |

| 1 | 1 | 1 | 1 |

This function returns True if A is False, B is True and C is False, or if A is True, B is False and C is False, or if A, B and C are all True… Wait! That sentence is basically an expression in Boolean algebra! Let us rewrite it in formal manner:

F(A,B,C) = ((not A) and B and (not C)) or (A and (not B) and (not C)) or (A and B and C)

So far so good. We expressed F using only “and”, “or”, and “not”. It should be clear that we can do the same thing for every function with at least one input. Therefore, we now have a universal toolkit we were looking for. Mathematicians call such toolkits “functionally complete sets of functions”. However, {“and”,”or”,”not”} is not what is called a minimal functionally complete set, which means that we can remove one function from the set and it will still be functionally complete. More precisely, it is possible to define “or” in terms of “and” and “not”:

A or B = not ((not A) and (not B))

…implying that “or” is redundant. We are left with the set {“and”,”not”}, which is minimal. The question now is, can we do better? Can we make a functionally complete set with a single element? Even though it may seem impossible at first, the answer is yes, as demonstrated by “nand” function:

| A | B | A nand B |

|---|---|---|

| 0 | 0 | 1 |

| 0 | 1 | 1 |

| 1 | 0 | 1 |

| 1 | 1 | 0 |

not A = A nand A

A and B = not (A nand B)

Besides “nand”, there is only only one 2-input Boolean function possessing this property, and it is “nor”, which returns True if and only if both inputs are False. Each of these functions can be easily implemented in real processors. However, there is a case when these functions are inconvenient, which is explained in the next section of my article.

Suppose you are a cellular automata nerd and you want to prove that your cellular automaton is Turing complete. There is no doubt that having a functionally complete set of functions will greatly help you achieve this task. And here you run into a small problem: in cellular automata, True is usually some “real” thing, like a spaceship or a Herschel in a track. “nand” and “nor” output True when both inputs are False, meaning that they should get an output from thin air, like a magician getting a rabbit from an empty hat. It is possible, but the resulting circuit would be very clumsy. As a result, most cellular automatists opt for a different set of functions, namely {True,”andnot”}:

A nor B = (True andnot A) andnot B

It may sound a little strange that I called True a function, but indeed it fits the definition: it has 0 inputs (any programmer will tell you that there are functions with zero inputs) and one output. In order to show why {True,”andnot”} is such a convenient set, let us take a random cellular automaton, namely my very own Alpha Particles, and try to demonstrate that this CA is Turing-complete. So, what do we need?

A signal to transfer information

No problem, we have one:

x = 1, y = 1, rule = Alpha-1

B!

A way to move a signal as we want

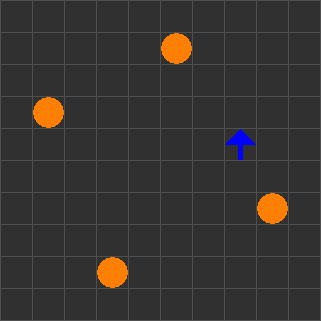

This is not too difficult, either. A 90-degree reflector can be constructed by placing a single “ball” (state 1 cell) near the path of an arrow. In the example below, the arrow is turned left:

x = 2, y = 6, rule = Alpha-1

A5$.B!

A way to duplicate a signal

Place a ball in a slightly different position:

x = 3, y = 6, rule = Alpha-1

A5$2.B!

“andnot” gate

And here comes the beautiful thing. Unlike “nand”, which would require some complicated circuitry, “andnot” is as easy as bumping two signals into each other:

x = 3, y = 4, rule = Alpha-1

C3$2.B!

They annihilate, so signal A comes out if it was present and signal B was not. You should now see why “andnot” is so cool: destructive collisions exist in almost every system, and where there is a destructive collision, there is “andnot”. For example, a billiard ball goes along a straight line if it is present andnot other ball kicks it; a pig gets out of a tunnel if it goes in andnot meets a crocodile there (the latter model would give rise to a wonderful computer).

A way to generate True const… Stop!

Actually, we do not need to invent a special way to generate truth, as we can use the components we already have. Just put three reflectors and one duplicator into a loop that will repeatedly emit signals:

x = 8, y = 8, rule = Alpha-1

4.A2$A$6.B2$7.A2$2.A!

And here it is. Functional completeness. And, if we are allowed to create infinite patterns, it also means Turing completeness. If, on the other hand, infinitely large patterns are disallowed, then functional completeness doesn’t imply Turing completeness. In such case we also need…

Memory

First of all, I should clarify what memory is. The memory of your computer is not the memory we want to obtain. Neither is the memory of your brain. When I said “memory”, I meant a variable which can be set to the desired value (maybe very inefficiently), which can be read (if you can only find out whether it is zero or non-zero, it is OK), and which, most importantly, can take infinitely many values. The simplest type of memory I know of is the so-called sliding-block memory. It consists of an object that can be moved along a straight line. Its position represents a non-negative integer, and there are only three allowed operations:

- Increment that integer by one.

- If it is non-zero, decrement it by one.

- Check whether it is zero.

In Alpha Particles, sliding-block memory is really easy to create. A ball can be pushed with one arrow and pulled with two arrows as in the image below. Check for zero is trivial, since we have an arrow-turning reaction from above (again we encounter “andnot”: an arrow passes straight if it exists andnot encounters a ball).

x = 7, y = 7, rule = Alpha-1

A4.A5$B3.B$6.B!

The Turing completeness follows.

P. S. All functional completeness stuff in this article was proven relatively rigorously. On the contrary, when talking about Turing completeness, I avoided any proofs. Really, why does functional completeness imply Turing completeness if the patterns can be infinite? How does the clumsy sliding-block memory help us so much? Those proofs were omitted on purpose: they are so complex that they deserve their own long article. Maybe I will write that article in the future, maybe not. For now, anyway, let us just use the standard phrase: “the scientists proved that…”

P. P. S. And for those of you who want an exercise, why not try to express the function F from the beginning of the article using only “nand”?